👨🏻💻 About

I am Xuchen Li (李旭宸), a first-year Ph.D. student at Beijing Zhongguancun Academy, where I am supervised by Prof. Weinan E (Academician of CAS), Prof. Bing Dong (Professor at PKU), and Prof. Wentao Zhang (Professor at PKU), and at the Institute of Automation, Chinese Academy of Sciences, where I am supervised by Prof. Kaiqi Huang (Professor at CASIA).

Before that, I received my B.E. degree in Computer Science and Technology from the School of Computer Science, Beijing University of Posts and Telecommunications, in Jun. 2024, with an overall ranking of 1/449 (0.22%). During my time there, I was awarded the China National Scholarship twice. Thank you to everyone for your support.

I am grateful to be growing up and studying with my twin brother Xuzhao Li (M.S. student at BIT), which is a truly unique and special experience for me. I am also proud to collaborate with Dr. Shiyu Hu (Research Fellow at NTU), who has had a significant impact on me.

My research focuses on Multi-modal Learning, Large Language Models, and Data-centric AI. If you are interested in my work or would like to collaborate, please feel free to contact me.

🔥 News

- 2024.12: 📝 One paper has been accepted by the 50th International Conference on Acoustics, Speech, and Signal Processing (ICASSP, CCF-B Conference)!

- 2024.11: 🏆 Awarded Top Ten Classes of University of Chinese Academy of Sciences (中国科学院大学十佳班集体) as class president (only 10 classes at UCAS receive this honor)!

- 2024.09: 📝 Two papers have been accepted by the 38th Conference on Neural Information Processing Systems (NeurIPS, CCF-A Conference)!

- 2024.08: 📣 Started my Ph.D. life at University of Chinese Academy of Sciences (UCAS), which is located in Huairou District, Beijing, near the beautiful Yanqi Lake.

- 2024.06: 👨🎓 Received my B.E. degree from Beijing University of Posts and Telecommunications (BUPT). I will always remember the wonderful 4 years I spent here. Thanks to all!


- 2024.06: 📝 One paper has been accepted by the 7th Chinese Conference on Pattern Recognition and Computer Vision (PRCV, CCF-C Conference)!

- 2024.05: 🏆 Awarded Beijing Outstanding Graduates (北京市优秀毕业生) (Top 5%, only 38 students in SCS, BUPT receive this honor)!

- 2024.04: 📝 One paper has been accepted as an Oral Presentation and awarded the Best Paper Honorable Mention Award by the 3rd CVPR Workshop on Vision Datasets Understanding (CVPRW, CCF-A Conference Workshop)!

- 2023.12: 🏆 Awarded the College Scholarship of University of Chinese Academy of Sciences (中国科学院大学大学生奖学金) (only 17 students at CASIA win this scholarship)!

- 2023.12: 🏆 Awarded the China National Scholarship (国家奖学金) with a rank of 1/455 (0.22%) (Top 1%, the highest honor for undergraduates in China)!

- 2023.11: 🏆 Awarded Beijing Merit Student (北京市三好学生) (Top 1%, only 36 students at BUPT receive this honor)!

- 2023.09: 📝 One paper has been accepted by the 37th Conference on Neural Information Processing Systems (NeurIPS, CCF-A Conference)!

- 2022.12: 🏆 Awarded the Huawei AI Education Base Scholarship (华为智能基座奖学金) (only 20 students at BUPT win this scholarship)!

- 2022.12: 🏆 Awarded the China National Scholarship (国家奖学金) with a rank of 2/430 (0.47%) (Top 1%, the highest honor for undergraduates in China)!

💻 Experience

- Nanyang Technological University (NTU)

Research Assistant on Multi-modal Large Language Model

Advisors: Dr. Shiyu Hu and Prof. Kang Hao Cheong

2024.07 - Now

- Ant Group (ANT)

Research Intern on Multi-modal Large Language Model Agent

Advisors: Dr. Jian Wang and Dr. Ming Yang

2024.06 - 2024.10

- Institute of Automation, Chinese Academy of Sciences (CASIA)

Member of Artificial Intelligence Elites Class

Advisor: Prof. Kaiqi Huang

2023.05 - 2024.04

- Tsinghua University (THU)

Research Assistant on 3D Vision

Advisor: Prof. Haoqian Wang

2023.01 - 2023.05

📖 Education

- Beijing Zhongguancun Academy

Ph.D. Student of Pattern Recognition and Intelligent System

Advisors: Prof. Weinan E, Prof. Bing Dong, and Prof. Wentao Zhang

2024.09 - Now

- Institute of Automation, Chinese Academy of Sciences (CASIA)

Ph.D. Student of Pattern Recognition and Intelligent System

Advisor: Prof. Kaiqi Huang

2024.08 - Now

- Beijing University of Posts and Telecommunications (BUPT)

Bachelor of Computer Science and Technology

Overall Ranking 1/449 (0.22%)

2020.09 - 2024.06

📝 Publications

✅ Acceptance

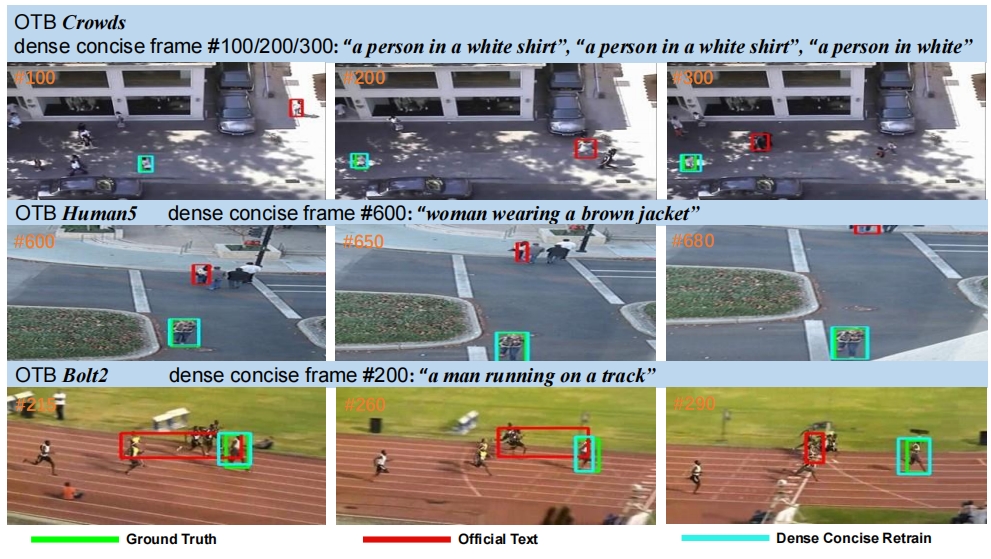

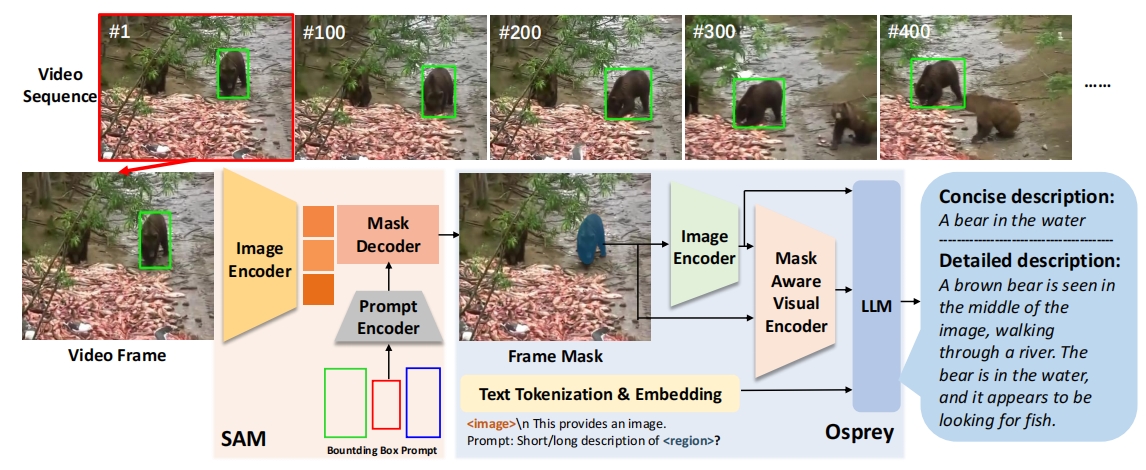

DTLLM-VLT: Diverse Text Generation for Visual Language Tracking Based on LLM

Xuchen Li, Xiaokun Feng, Shiyu Hu, Meiqi Wu, Dailing Zhang, Jing Zhang, Kaiqi Huang

CVPRW 2024 (CCF-A Conference Workshop): the 3rd CVPR Workshop on Vision Datasets Understanding

Oral Presentation, Best Paper Honorable Mention Award

[Paper] [PDF] [Code] [Website] [Award]

MemVLT: Visual-Language Tracking with Adaptive Memory-based Prompts

Xiaokun Feng, Xuchen Li, Shiyu Hu, Dailing Zhang, Meiqi Wu, Jing Zhang, Xiaotang Chen, Kaiqi Huang

NeurIPS 2024 (CCF-A Conference): the 38th Conference on Neural Information Processing Systems

[Paper] [PDF] [Code]

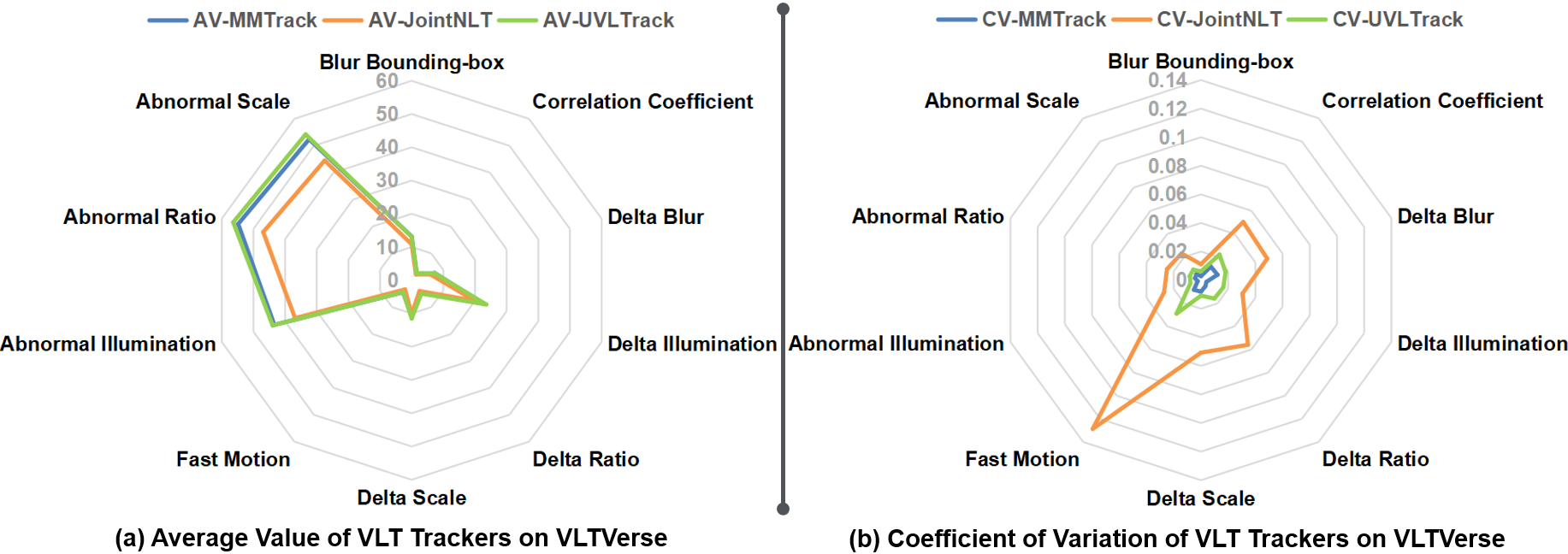

Beyond Accuracy: Tracking more like Human through Visual Search

Dailing Zhang, Shiyu Hu, Xiaokun Feng, Xuchen Li, Meiqi Wu, Jing Zhang, Kaiqi Huang

NeurIPS 2024 (CCF-A Conference): the 38th Conference on Neural Information Processing Systems

[Paper] [PDF] [Code]

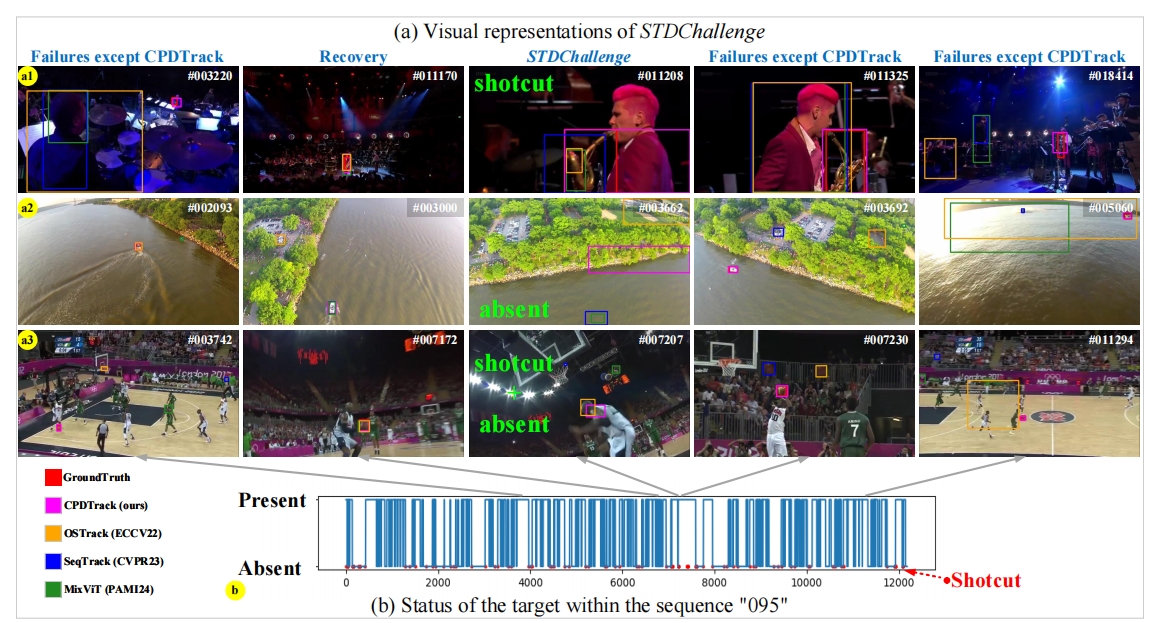

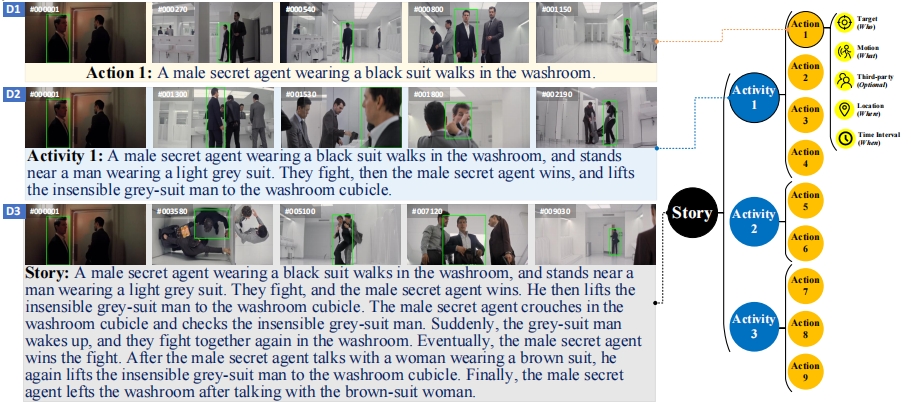

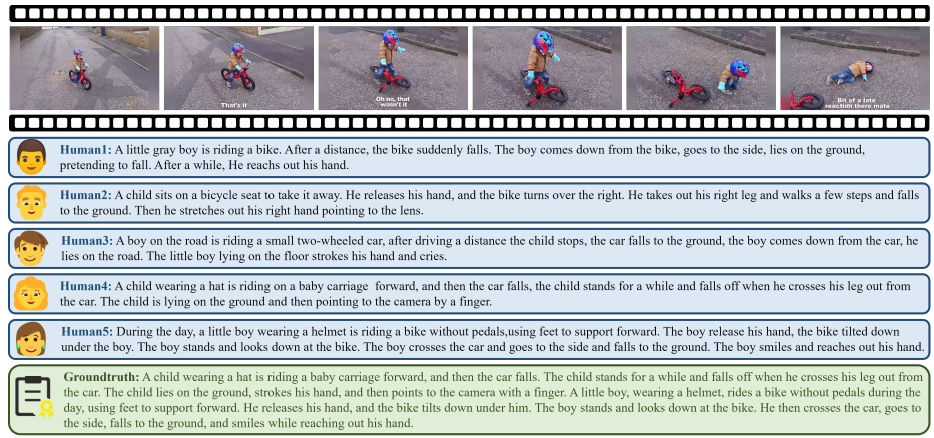

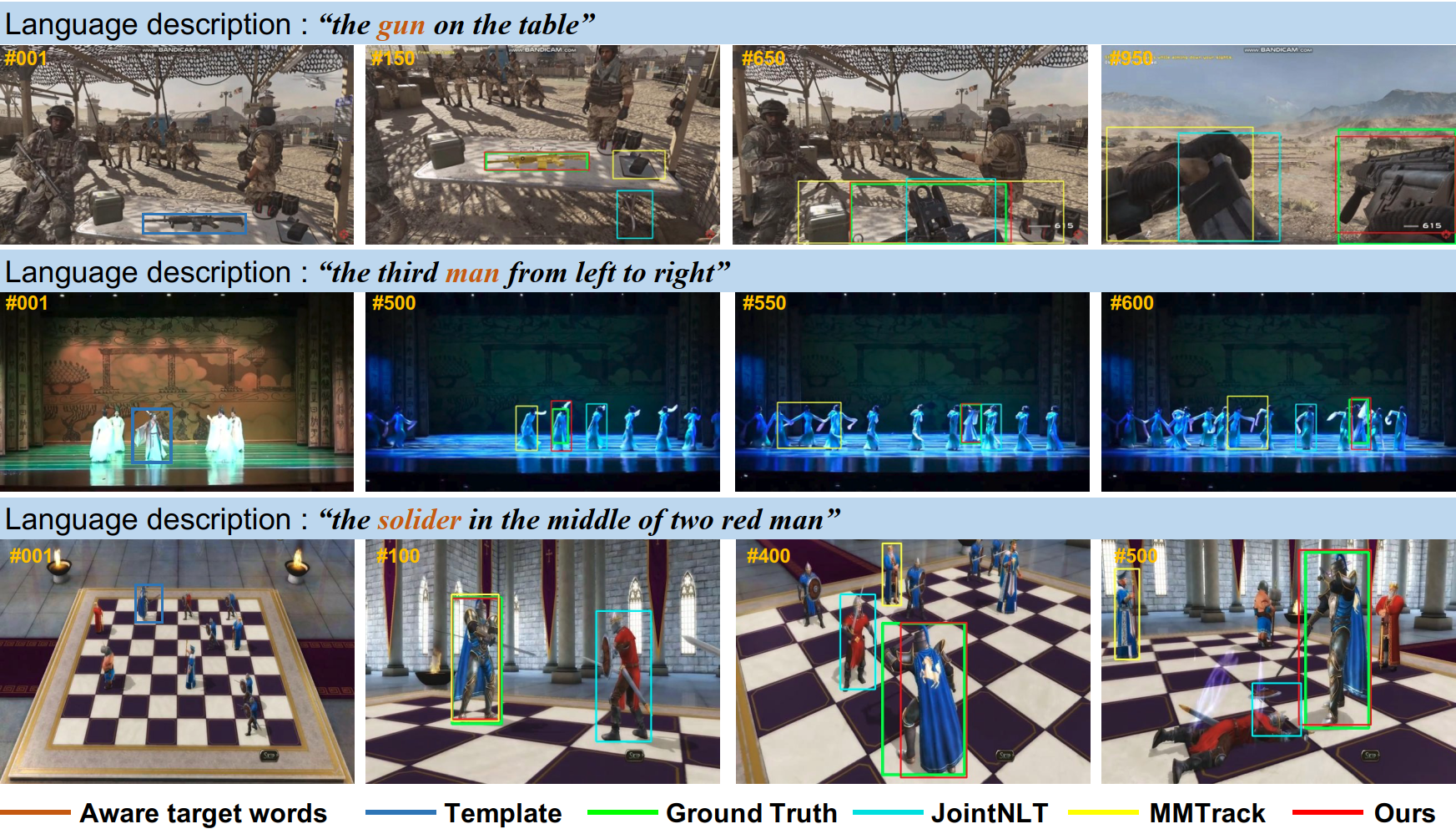

A Multi-modal Global Instance Tracking Benchmark (MGIT): Better Locating Target in Complex Spatio-temporal and Causal Relationship

Shiyu Hu, Dailing Zhang, Xiaokun Feng, Xuchen Li, Xin Zhao, Kaiqi Huang

NeurIPS 2023 (CCF-A Conference): the 37th Conference on Neural Information Processing Systems

[Paper] [PDF] [Code] [Website]

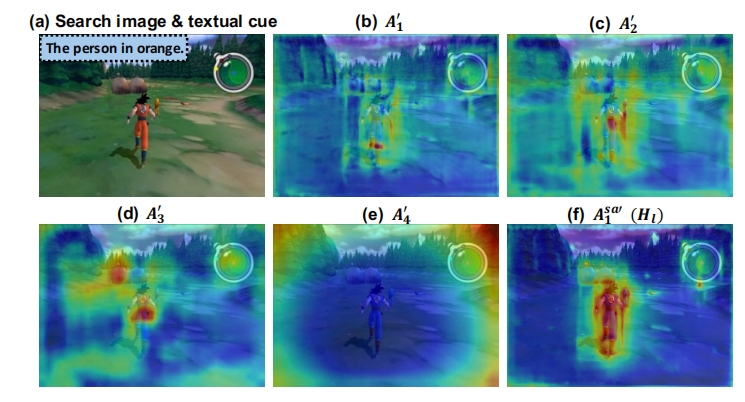

Enhancing Vision-Language Tracking by Effectively Converting Textual Cues into Visual Cues

Xiaokun Feng, Dailing Zhang, Shiyu Hu, Xuchen Li, Meiqi Wu, Jing Zhang, Xiaotang Chen, Kaiqi Huang

ICASSP 2025 (CCF-B Conference): the 50th International Conference on Acoustics, Speech, and Signal Processing

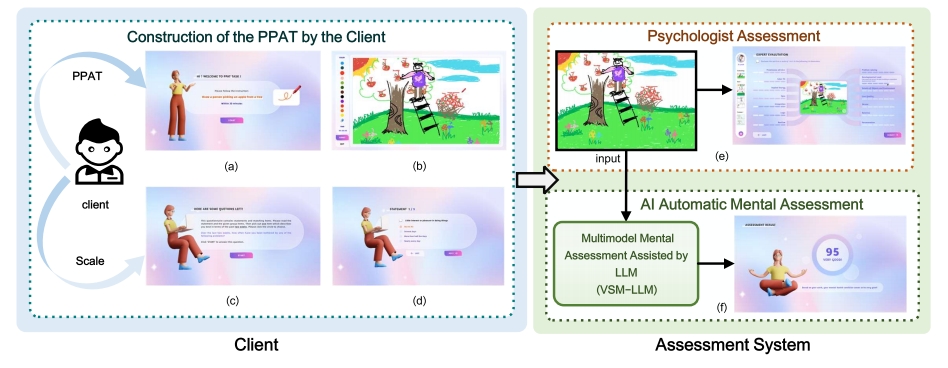

VS-LLM: Visual-Semantic Depression Assessment based on LLM for Drawing Projection Test

Meiqi Wu, Yaxuan Kang, Xuchen Li, Shiyu Hu, Xiaotang Chen, Yunfeng Kang, Weiqiang Wang, Kaiqi Huang

PRCV 2024 (CCF-C Conference): the 7th Chinese Conference on Pattern Recognition and Computer Vision

[Paper] [PDF] [Code]

☑️ Ongoing

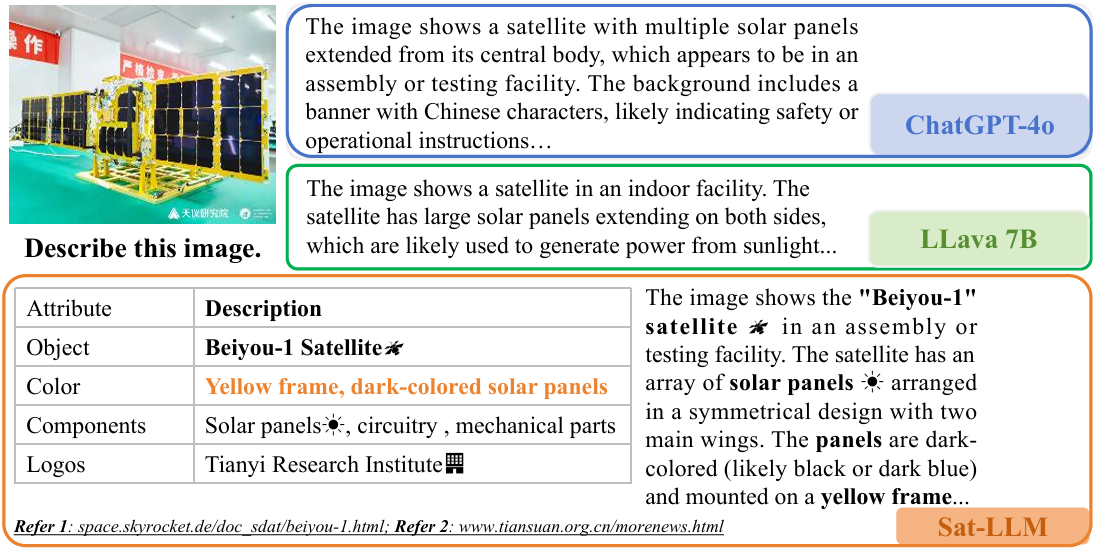

Sat-LLM: Multi-View Retrieval-Augmented Satellite Commonsense Multi-Modal Iterative Alignment LLM

Qian Li*, Xuchen Li*, Zongyu Chang, Yuzheng Zhang, Cheng Ji, Shangguang Wang (*Equal Contributions)

Submitted to a CCF-A conference, under review

ATCTrack: Leveraging Aligned Target-Context Cues for Robust Vision-Language Tracking

Xiaokun Feng, Shiyu Hu, Xuchen Li, Dailing Zhang, Meiqi Wu, Jing Zhang, Xiaotang Chen, Kaiqi Huang

Submitted to a CCF-A conference, under review

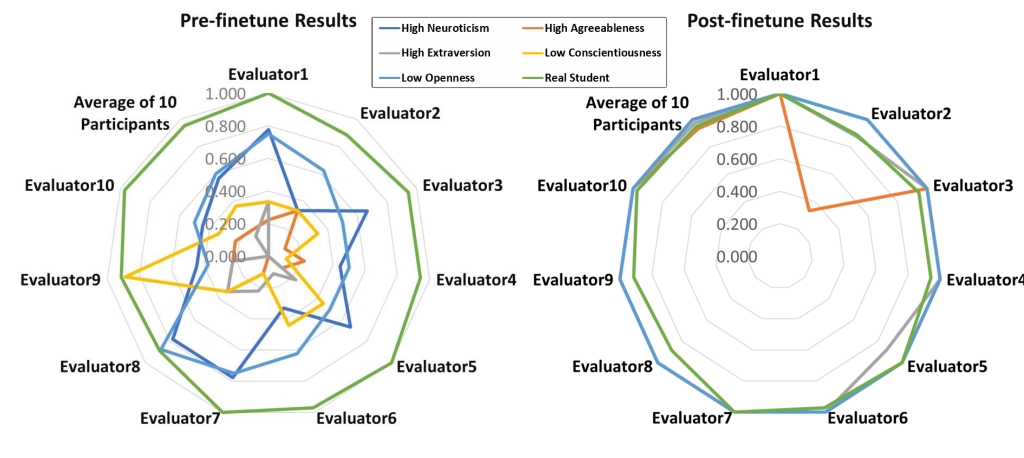

Students Rather Than Experts: A New AI for Education Pipeline to Model More Human-like and Personalised Early Adolescences

Yiping Ma*, Shiyu Hu*, Xuchen Li, Yipei Wang, Shiqing Liu, Kang Hao Cheong (*Equal Contributions)

Submitted to a CAAI-A conference, under review

🏆 Honors

- Best Paper Honorable Mention Award (最佳论文荣誉提名奖), at CVPR Workshop on Vision Datasets Understanding, 2024

- China National Scholarship (国家奖学金), My Rank: 1/455 (0.22%), Top 1%, at BUPT, by Ministry of Education of China, 2023

- China National Scholarship (国家奖学金), My Rank: 2/430 (0.47%), Top 1%, at BUPT, by Ministry of Education of China, 2022

- China National Encouragement Scholarship (国家励志奖学金), My Rank: 8/522 (1.53%), at BUPT, by Ministry of Education of China, 2021

- Huawei AI Education Base Scholarship (华为智能基座奖学金), at BUPT, by Ministry of Education of China and Huawei AI Education Base Joint Working Group, 2022

- Beijing Merit Student (北京市三好学生), Top 1%, at BUPT, by Beijing Municipal Education Commission, 2023

- Beijing Outstanding Graduates (北京市优秀毕业生), Top 5%, at BUPT, by Beijing Municipal Education Commission, 2024

- College Scholarship of University of Chinese Academy of Sciences (中国科学院大学大学生奖学金), at CASIA, by University of Chinese Academy of Sciences, 2023

- Top Ten Classes of University of Chinese Academy of Sciences (中国科学院大学十佳班集体), as class president at UCAS, by University of Chinese Academy of Sciences, 2024

🎤 Talks

- Oral presentation at the CVPR 2024 Workshop on Vision Datasets Understanding, Seattle, WA, USA (Slides)

🔗 Services

- Conference Reviewer

International Conference on Learning Representations (ICLR)

ACM Conference on Human Factors in Computing Systems (CHI)

IEEE Virtual Reality (IEEE VR)

International Conference on Pattern Recognition (ICPR)

International Joint Conference on Neural Networks (IJCNN)

🌟 Projects

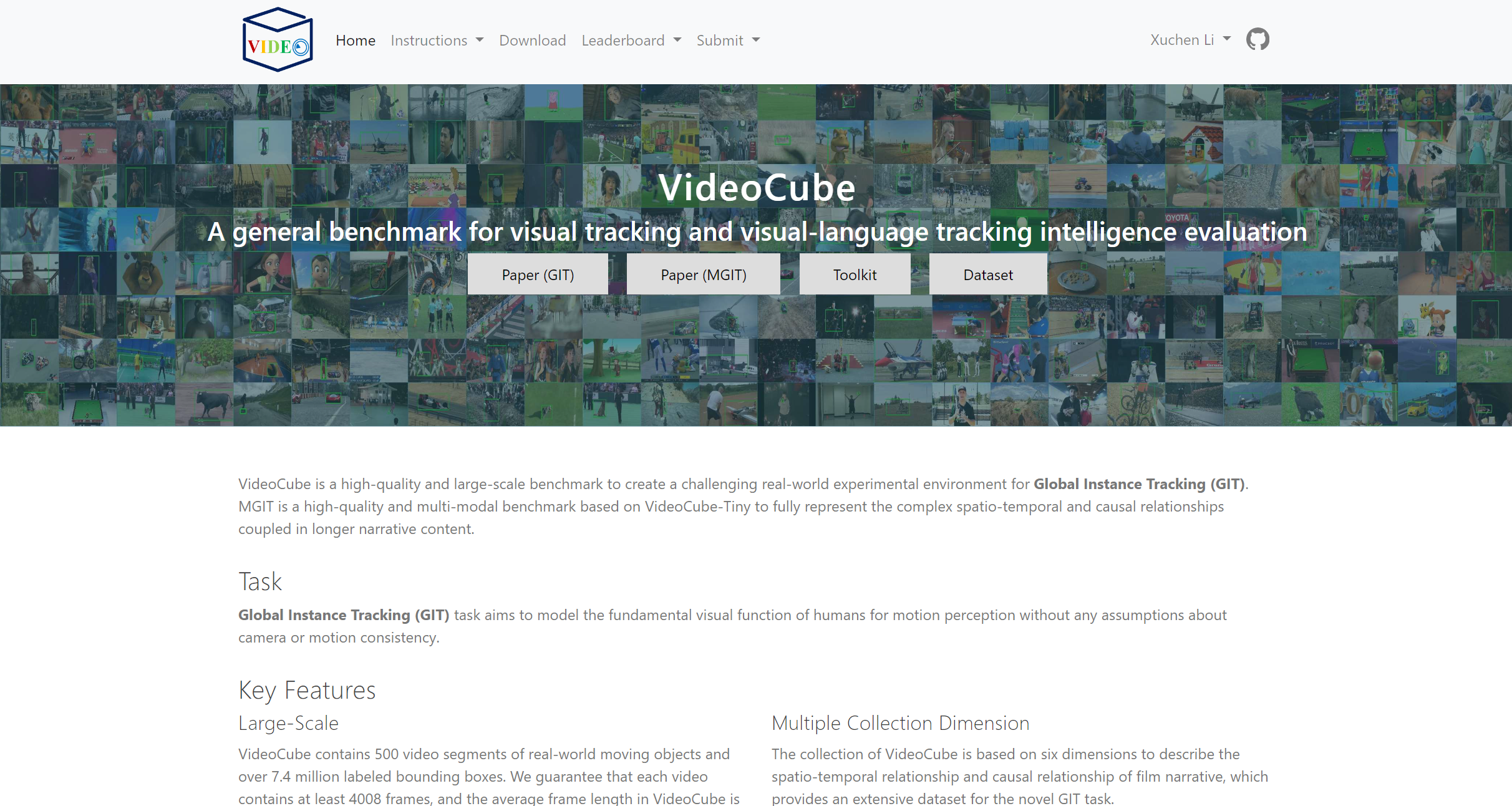

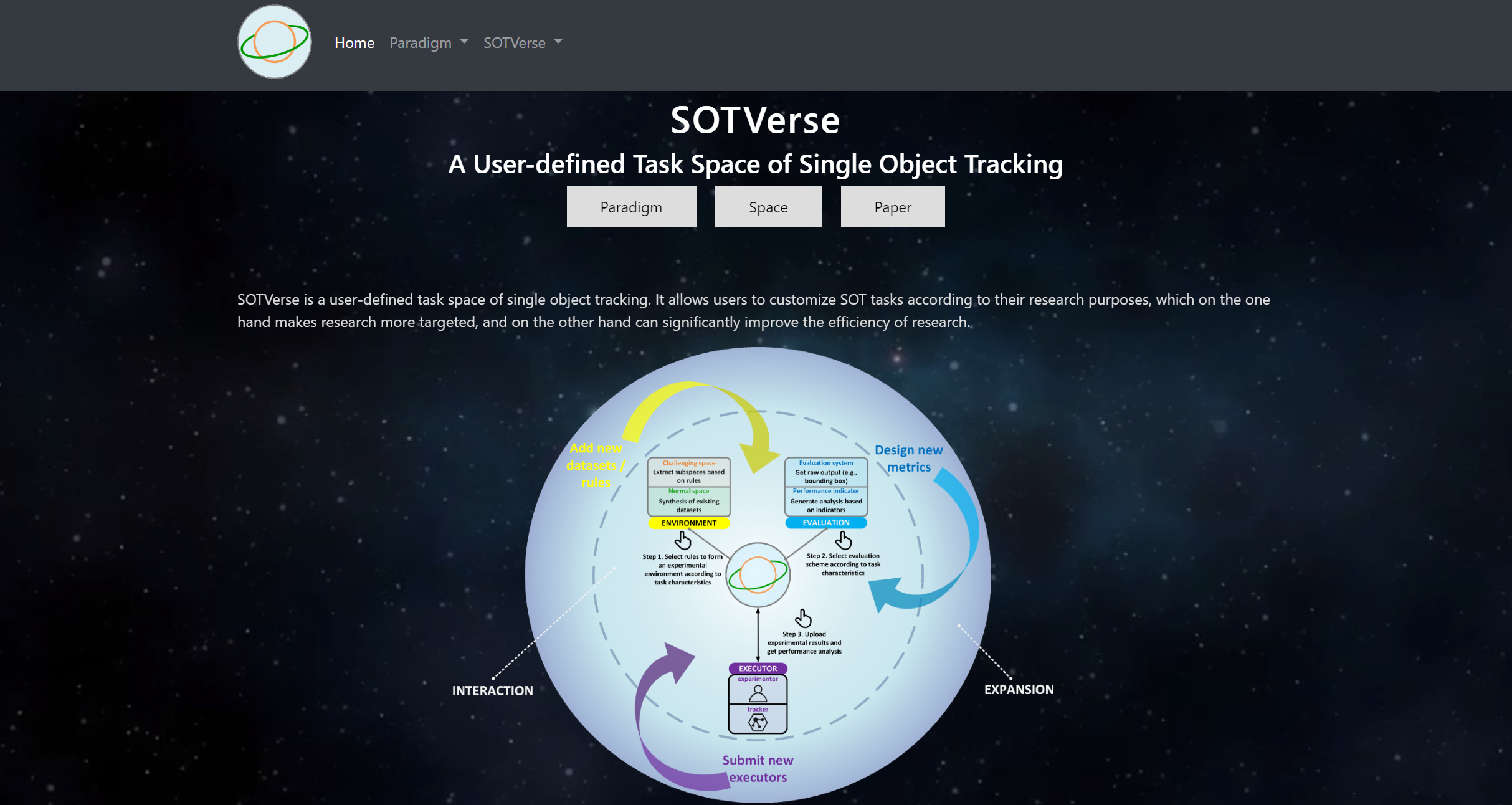

VideoCube / MGIT

- Visual Object Tracking / Visual Language Tracking / Environment Construction

- As of Sept. 2024, the platform has received 440k+ page views, 1.2k+ downloads, and 420+ trackers from 220+ countries and regions worldwide.

- VideoCube / MGIT is the supporting platform for research accepted by IEEE TPAMI 2023 and NeurIPS 2023.

SOTVerse

- Visual Object Tracking / Environment Construction / Evaluation Technique

- As of Sept. 2024, the platform has received 126k+ page views from 150+ countries and regions worldwide.

- SOTVerse is the supporting platform for research accepted by IJCV 2024.

GOT-10k: A Large High-diversity Benchmark and Evaluation Platform for Single Object Tracking

- Visual Object Tracking / Environment Construction / Evaluation Technique

- As of Sept. 2024, the platform has received 3.92M+ page views, 7.5k+ downloads, and 21.5k+ trackers from 290+ countries and regions worldwide.

- GOT-10k is the supporting platform for research accepted by IEEE TPAMI 2021.

BioDrone: A Bionic Drone-based Single Object Tracking Benchmark for Robust Vision

- UAV Tracking / Environment Construction / Evaluation Technique

- As of Sept. 2024, the platform has received 170k+ page views from 200+ countries and regions worldwide.

- BioDrone is the supporting platform for research accepted by IJCV 2024.

© Xuchen Li | Last updated: 2024.12